More MLCC learning

It seems that I’m learning a lot about PCB design the hard way. Last year I wrote up my discovery of MLCC bias derating. Now I’ll share some of my experience with MLCC cracking on the first production moteus controllers.

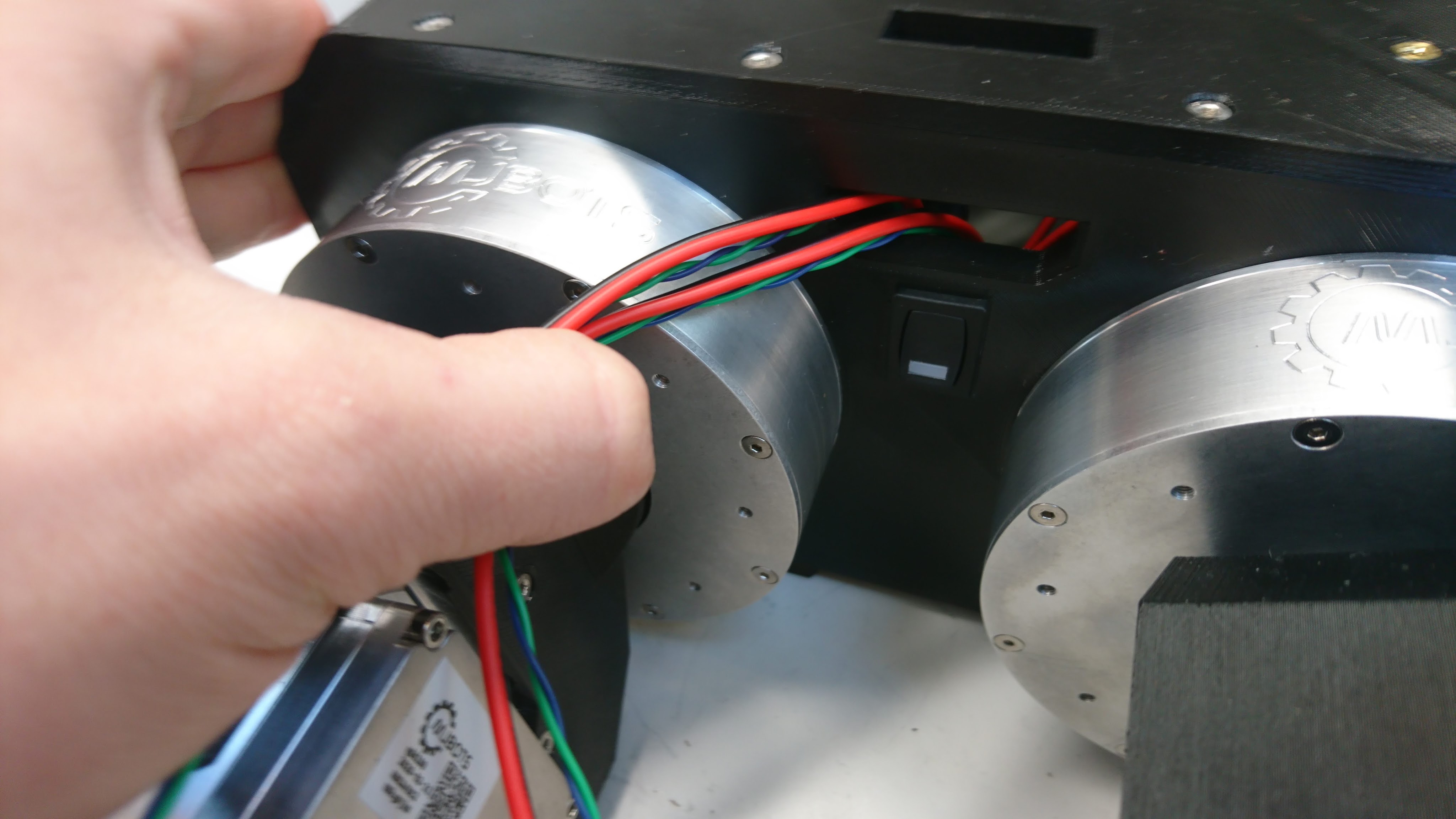

When I was first putting the production moteus controllers through their test and programming sequence, I ran into a failure mode that I had never seen in my career (which admittedly doesn’t include much board manufacturing). When applying voltage, I got a spark and a puff of magic smoke from near one of the DC link capacitors on the left-hand side. In the first batch of 40 I programmed, a full 20% failed in this way, some at 24V, and a few more at a 38V test. I initially thought the problem might have been an etching issue resulting in voltage breakdown between a via and an internal ground plane, but after examining the results under the microscope and conferring with MacroFab, I determined that the most likely cause was cracking of the MLCCs during PCB depanelization.